Since joining the Green Software Foundation’s Real Time Cloud working group, we’ve been joining calls every two weeks with other producers and consumers of cloud emissions data to make working with it easier. Each time, we meet to understand the complexities of accurately reporting data about the environmental impact of using cloud services, so we can reflect this in our own work. In this post, Chris gives an update on what we’ve learned, what’s been produced, and what work has gone on since our last post in April 2024.

What is the Real Time Cloud Working Group and why would anyone join in the first place?

I’m glad you asked!

When we say Real Time Cloud Working Group, we’re referring to the working group within the Green Software Foundation, with the title Real Time Energy and Carbon Standard for Cloud Providers. That’s quite a mouthful, so a lot of the time Real Time Cloud is used instead.

Anyway, two of the problems you quickly encounter when you want to understand the environmental impact of the cloud services you use are that:

- every cloud provider reports their impacts slightly differently

- reporting gets complex very quickly

Together, these can be a real pain if you want to make credible statements about say… the carbon emissions associated with your usage of cloud services.

Why would you care about knowing this?

We care about this because we care about reaching a fossil free internet by 2030, and what we’ve found is that there is a growing number of people who care about understanding how to make the use of cloud services greener too.

But even if you don’t, in many parts of the world, there is a growing body of law covering this, so you might want to know anyway.

In Europe, for example, if you use cloud services and your organization is covered by the Corporate Sustainability Reporting Directive, the use of cloud computing is now explicitly called out in the law. Here’s some of the text from the reporting standards that spells out what to include (in this quote, “undertaking”, loosely translated from the legal jargon, means “company”):

If it is material for the undertaking’s Scope 3 emissions, it shall disclose the GHG emissions from purchased cloud computing and data centre services as a subset of the overarching Scope 3 category “upstream purchased goods and services”.

European Sustainability Reporting Standards ESRS – AR51

(As an aside, here’s a link directly to the corresponding standard, so you can share a link to this text in bright red letters when people ask you about this in future.)

OK, now we understand the problem, what has the working group been up to?

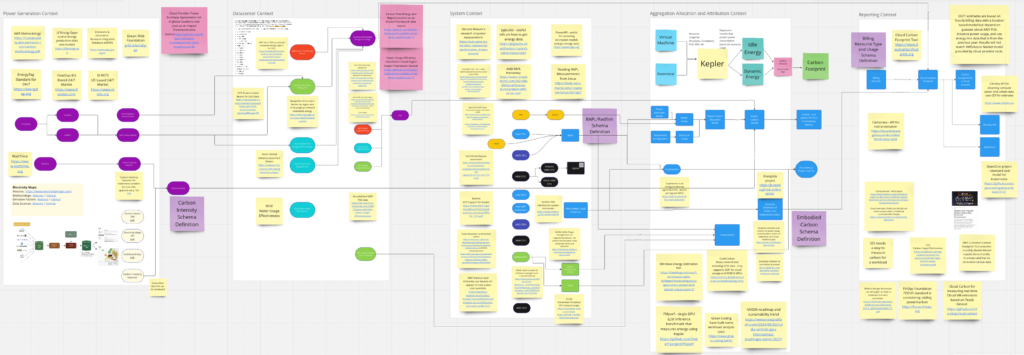

One of the key tasks, led by the two chairs Adrian Cockcroft and Pindy Bhullar, has been to map out all the various sources of data that influence the numbers you might use for reporting carbon emissions from cloud.

This is no small task, and there is now a detailed (if somewhat daunting) Miro board listing all of these. We’ve shared a thumbnail screenshot below, and if you follow the link, you’ll end up on the public Miro board, where you can peruse the notes and data.

The other key task has been to create a single normalised dataset that covers all the cloud regions from the three largest providers, which together make up more than 80% of the cloud market. The aim is to have consistent and comparable numbers that you can use to report emissions from cloud if you are using services from more than one provider. To use the wording from the project:

The cloud providers disclose metadata about regions on an annual basis. This data may include Power and Water Usage Effectiveness, carbon free energy percentage, and the location and grid region for each cloud region. This project is gathering and releasing this metadata as a single data source, and lobbying the cloud providers to release data that is aligned across providers.

From “Real Time Energy and Carbon Standard for Cloud Providers”
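To make the idea of a single normalised dataset a little more concrete, here is a minimal Python sketch of what one normalised row could look like. This is an illustration only: the `RegionRecord` class, its field names, the `normalise_row` helper and the input keys are all assumptions made for this example, not the project's actual schema. The placeholder values in the usage note are made up, not real provider data.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class RegionRecord:
    """One cloud region in one reporting year (hypothetical field names)."""
    year: int
    provider: str
    region: str
    pue: Optional[float]            # Power Usage Effectiveness
    wue: Optional[float]            # Water Usage Effectiveness
    cfe_fraction: Optional[float]   # carbon free energy, as a 0.0-1.0 fraction
    grid_region: Optional[str]


def parse_number(raw: Optional[str]) -> Optional[float]:
    """Turn text such as '1.2' into a float; blank or missing becomes None."""
    if raw is None or not raw.strip():
        return None
    return float(raw.strip())


def parse_percentage(raw: Optional[str]) -> Optional[float]:
    """Turn text such as '87%' or '87' into a fraction; blank becomes None."""
    if raw is None:
        return None
    text = raw.strip().rstrip("%").strip()
    if not text:
        return None
    return float(text) / 100.0


def normalise_row(row: Mapping[str, str]) -> RegionRecord:
    """Map one provider-specific row (assumed keys) onto the shared shape."""
    return RegionRecord(
        year=int(row["year"]),
        provider=row["provider"],
        region=row["region"],
        pue=parse_number(row.get("pue")),
        wue=parse_number(row.get("wue")),
        cfe_fraction=parse_percentage(row.get("cfe")),
        grid_region=row.get("grid_region") or None,
    )
```

Calling `normalise_row({"year": "2023", "provider": "provider-a", "region": "region-1", "pue": "1.2", "wue": "", "cfe": "87%"})` gives a record where the missing water figure stays `None` rather than being guessed. That matters here, because providers do not all disclose the same metrics.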

This information is complementary to the information we publish ourselves, and currently goes into more detail than we do in some cases. We’d also like to support this kind of detail ourselves in our platform, so getting familiar with the data ahead of time helps when thinking through the required updates for our own platform.

Where’s the data?

We can’t tease you about data without sharing data, and the good news is that since the last update, there have been multiple “releases” of data – one big “official” one in August 2024, and most recently another, “dev” snapshot in December 2024.

You can see them on the Real Time Cloud project website on GitHub, but for convenience we’ve also uploaded them to our datasets website, at datasets.greenweb.org. We are using Datasette to host the data in a form that allows for easier exploration, and for linking to filtered views of the data.

Want to see a faceted view of all the cloud regions from the biggest three providers, with carbon data, grouped by year? Here’s that link. We’ve shared a screenshot below to give an idea of what to expect:

Want to see the greenest regions from Google Cloud, based on the actual power consumed, in order of “greenness”? Here’s a link for that too.

Under the hood, this data is a read-only SQLite database, and you’re free to run your own queries against this dataset. You can do this by clicking ‘View and edit SQL’ and then coming up with a specific view you want to share – give it a go!
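If you would rather experiment locally first, the sketch below shows the kind of query described above ("greenest regions for one provider, in order") using Python's built-in `sqlite3` module. It is only a rough illustration: the `cloud_regions` table, its column names and every row in it are invented placeholders for this example, so check the real table and column names on the datasets site before adapting it.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical table and fake rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE cloud_regions ("
        "year INTEGER, provider TEXT, region TEXT, cfe_fraction REAL)"
    )
    # Made-up placeholder values, NOT real provider disclosures.
    conn.executemany(
        "INSERT INTO cloud_regions VALUES (?, ?, ?, ?)",
        [
            (2023, "provider-a", "region-1", 0.50),
            (2023, "provider-a", "region-2", 0.90),
            (2023, "provider-a", "region-3", None),  # nothing disclosed
            (2023, "provider-b", "region-1", 0.99),
            (2022, "provider-a", "region-1", 0.40),
        ],
    )
    return conn


def greenest_regions(conn, provider, year, limit=3):
    """Return (region, cfe_fraction) pairs, highest carbon free share first."""
    query = """
        SELECT region, cfe_fraction
        FROM cloud_regions
        WHERE provider = ?
          AND year = ?
          AND cfe_fraction IS NOT NULL   -- skip regions with no disclosure
        ORDER BY cfe_fraction DESC
        LIMIT ?
    """
    return conn.execute(query, (provider, year, limit)).fetchall()


if __name__ == "__main__":
    demo = build_demo_db()
    for region, cfe in greenest_regions(demo, "provider-a", 2023):
        print(f"{region}: {cfe:.0%} carbon free energy")
```

The `WHERE` clause filters out regions with no disclosed figure, so a missing value is never ranked as if it were zero. The same `SELECT` statement, with the real table and column names swapped in, is the sort of thing you could paste into the SQL editor on the hosted dataset.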

Where next?

Cleaning data is a labour-intensive task, and a massive amount of this has relied on the efforts of ex-AWS VP of Sustainability Adrian Cockcroft since he left the cloud giant. There has also been a steady flow of contributions from people in organisations who require this information themselves, and also from folks within large companies like Microsoft and Google, who have an interest in this data being reported fairly and accurately.

For our part, we’ve been working to prepare the dataset to make it easier for other orgs to use in their own software (and figure out how best to use it in our own platform, too).

We’re hoping that new disclosures in 2025 will help lead to a more fleshed out dataset as new data comes into the public domain, but right now, having this dataset in the public domain is a significant step forward from where things were before the project started.

All the work is in the open on GitHub, so if this looks interesting, we encourage you to get involved.